LLM Interviews: Prompt Engineering

- I’ll use any resource that helps me to prepare faster.

- I’m not studying purely for interviews, but preparing for them has taught me a lot over the years (check the main page of the website for my 8-year-old YouTube channel).

- I’ll cite every content I use.

- I’ll aggressively reference anything I believe explains things better than I do.

Prompt Engineering & Basics of LLM

- Prompt Engineering & Basics of LLM

- 1. What is the difference between Predictive/Discriminative AI and Generative AI?

- 2. What is a token in the language model?

- 3. How to estimate the cost of running SaaS-based and Open Source LLM models?

- 4. Explain the Temperature parameter and how to set it.

- 5. Explain the basic structure of prompt engineering

- 6. Explain in-context learning

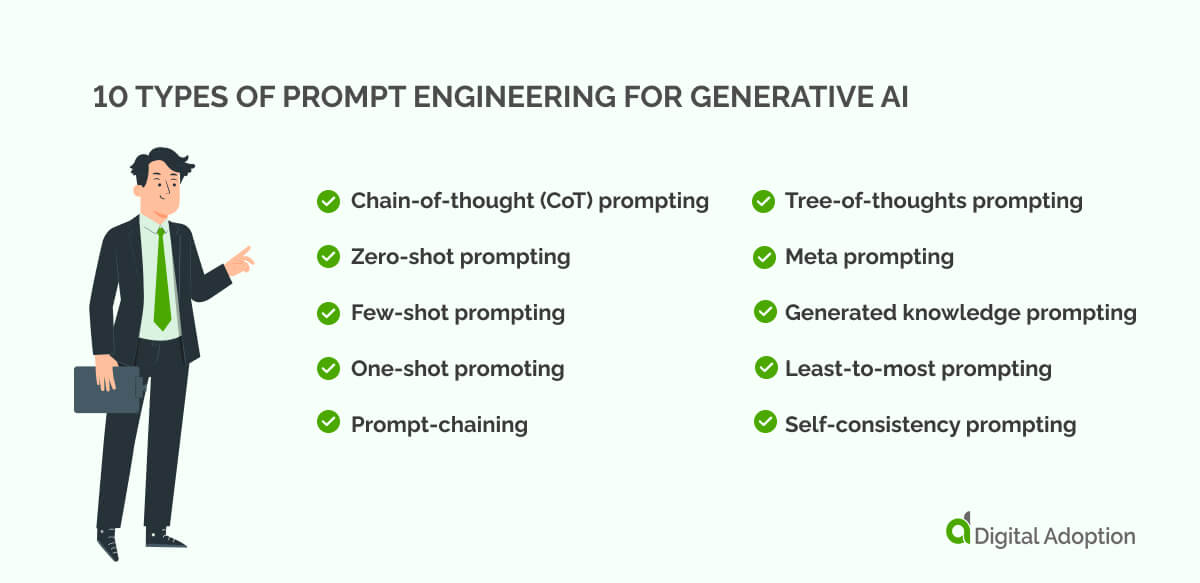

- 7. Explain types of prompt engineering

- 8. What is hallucination, and how can it be controlled using prompt engineering?

- 9. What are some aspects to keep in mind while using few-shot prompting?

- 10. What are certain strategies to write good prompts?

- 11. How to improve the reasoning ability of LLM through prompt engineering?

- 12. How to improve LLM reasoning if your Chain-of-Thought (COT) prompt fails?

1. What is the difference between Predictive/Discriminative AI and Generative AI?

Predictive/discriminative AI generally works in non-conversational areas. Typical models include CNNs, tree-based algorithms, and NER models, which make predictions, recommendations, decisions, forecasts, and so on.

On the other hand, Generative AI creates content, code, music and marketing material and can translate data into different formats.

Earlier examples of generative AI models were variational autoencoders (VAEs) and GANs, like this and this, which amazed me SO MUCH at the time.

With the rise of transformer models, we have seen examples of code generation, music generation, video-to-video transformations, text-to-video generations, and LLMs.

|

|---|

| Resource from the amazing ML Mastery Blog, which has taught millions, kudos to them |

- High resource usage

- Hallucinations

- Stability is still a work in progress; it took years to get from GANs to today's models

- Difficult to remove sensitive data/content filtering

2. What is a token in the language model?

A token is the smallest unit of data that the model reads and generates: a chunk that carries enough information to be useful. It doesn’t have to be a word or a syllable; for image models it can be a patch of an image encoded as a vector.

Depending on the size of the training data, the number of tokens can run into the billions or trillions. Per the pretraining scaling laws, more training tokens generally mean a better model, as long as model size and compute scale with them.

Side Note on Number of Tokens in Famous Models

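According to the Chinchilla scaling law and related research, the compute-optimal number of training tokens is roughly 20 times the number of model parameters. In practice, many models are trained on far more tokens than that ratio suggests, as the examples below show: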

- A 5 billion parameter model is trained on about 1 trillion tokens.

- A 9 billion parameter model is trained on about 2.1 trillion tokens.

- A 3 billion parameter model is trained on about 2.8 trillion tokens.

- A 6 billion parameter model is trained on over 6.1 trillion tokens.

- Larger models with tens of billions of parameters may be trained on tens of trillions of tokens (e.g., 20B parameters on 60+ trillion tokens) Great Resource on this and another
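To make the 20x rule of thumb concrete, here is a minimal sketch. The function names are mine, and the 8B model in the usage lines is only an illustrative input, not a figure from the resources above.

```python
def chinchilla_optimal_tokens(n_params: float, tokens_per_param: float = 20.0) -> float:
    """Compute-optimal training tokens under the ~20 tokens/parameter rule of thumb."""
    return n_params * tokens_per_param


def tokens_per_parameter(n_params: float, n_tokens: float) -> float:
    """How many training tokens a model actually saw per parameter."""
    return n_tokens / n_params


# Illustrative: an 8B-parameter model would want ~160B tokens under the rule.
print(chinchilla_optimal_tokens(8e9))

# The first example in the list above (5B parameters, 1T tokens) is far above 20x.
print(tokens_per_parameter(5e9, 1e12))
```

Comparing the second number with 20 shows how far a given training run sits from the compute-optimal ratio.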


|

|---|

| Resource from Eden AI, LLM Billing |

LLMs are trained to predict the next token correctly.

“The cat is beautiful.” is represented by an LLM as the following tokens:

| Token ID | Token | Notes |

|---|---|---|

| 1 | The | Article |

| 32 | Ġcat | Word with space prefix |

| 542 | Ġis | Common verb with space |

| 123 | Ġbeautiful | Adjective with space |

| 13 | . | Punctuation |

At each step, it predicts the next word (or token) based on the previous ones:

| Input Tokens | Text So Far | What Comes Next? |
|---|---|---|
| [1] | “The” | What is the next word? |
| [1, 32] | “The cat” | What is the next word? |
| [1, 32, 542] | “The cat is” | What is the next word? |
| [1, 32, 542, 123] | “The cat is beautiful” | What is the next word? |
| [1, 32, 542, 123, 13] | “The cat is beautiful.” | What is the next word? I think I should stop. |
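The loop in the table can be sketched in a few lines. This is a toy: a real model scores every token in its vocabulary at each step, while here a hard-coded lookup table (`NEXT_TOKEN`, my invention) stands in for the model. The token IDs are the illustrative ones from the table above, and the `Ġ` prefix is written as a plain leading space.

```python
from typing import Dict, List, Tuple

# Toy vocabulary using the illustrative IDs from the table above.
VOCAB: Dict[int, str] = {1: "The", 32: " cat", 542: " is", 123: " beautiful", 13: "."}

# Stand-in for the model: maps "tokens so far" to the next token ID.
NEXT_TOKEN: Dict[Tuple[int, ...], int] = {
    (1,): 32,
    (1, 32): 542,
    (1, 32, 542): 123,
    (1, 32, 542, 123): 13,
}


def generate(prompt_ids: List[int], max_new_tokens: int = 10) -> List[int]:
    """Append one token at a time until the 'model' has nothing more to say."""
    ids = list(prompt_ids)
    for _ in range(max_new_tokens):
        next_id = NEXT_TOKEN.get(tuple(ids))
        if next_id is None:  # no continuation known: stop generating
            break
        ids.append(next_id)
    return ids


def decode(ids: List[int]) -> str:
    """Turn token IDs back into text."""
    return "".join(VOCAB[i] for i in ids)


print(decode(generate([1])))  # The cat is beautiful.
```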

Token counts are also the first metrics to consider for LLM deployments. When choosing a GPU for a given model, or choosing between a smaller (1.7B) and a larger (70B) model on the same GPU, token-based metrics are very useful alongside the semantic performance/evaluation of the models.

| Metric | Description | When It Matters |

|---|---|---|

| Time to First Token (TTFT) | Time from sending the prompt to receiving the first token. | 🧠 Important for interactive apps, chatbots, coding assistants, low-latency UIs |

| Tokens per Second (TPS) | Speed at which the model generates tokens after the first one. | 📊 Crucial for batch processing, summarization, and longer outputs |

| Prompt Length (Input Tokens) | Number of tokens in the input. Affects memory and context cost. | 🧾 Key for choosing context window size, managing VRAM usage |

| Output Length (Generated Tokens) | Number of tokens the model needs to generate. | 🎯 Used to estimate latency, throughput, and inference cost |

| Total Tokens (Input + Output) | Combined token count per request. Used for throughput and cost estimation. | 💸 Necessary for pricing, rate-limiting, and SLA planning |
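If you stream a response and record a timestamp for every token, the first two metrics fall out directly. A minimal sketch, assuming you already collected the timestamps yourself (the function name and the sample numbers are hypothetical):

```python
from typing import Dict, List, Optional


def latency_metrics(request_sent: float, token_times: List[float]) -> Dict[str, Optional[float]]:
    """Compute TTFT and TPS from the send time and per-token arrival times (seconds)."""
    if not token_times:
        raise ValueError("no tokens were received")
    ttft = token_times[0] - request_sent
    decode_time = token_times[-1] - token_times[0]
    tokens_after_first = len(token_times) - 1
    # TPS only counts tokens generated after the first one, as in the table.
    tps = tokens_after_first / decode_time if decode_time > 0 else None
    return {"ttft_s": ttft, "tokens_per_s": tps, "output_tokens": float(len(token_times))}


# Hypothetical measurement: first token after 0.5 s, then one token every 0.25 s.
metrics = latency_metrics(0.0, [0.5, 0.75, 1.0, 1.25, 1.5])
print(metrics)
```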

3. How to estimate the cost of running SaaS-based and Open Source LLM models?

This is a very good question for identifying people who actually deploy, or decide to deploy, LLMs. Our purpose as a team was to evaluate whether we should deploy a model on GPUs (such as RunPod, Lambda Labs, Modal, or AWS Bedrock, Google Cloud Vertex, Azure) or use public SaaS-based APIs (such as OpenAI, Anthropic, Cohere, or the Mistral API).

I’m not name-dropping (brand-dropping) here; I’m listing names you can use during interviews. I wish they would hire me; I swear I don’t earn money through this GitHub-hosted website :)

Let’s go with an example.

Let’s say we have an AI app and estimate 100,000 API requests per month. Traffic is low on weekends, and we expect peak load in the afternoon. Our app reads a 3-page PDF and returns a summary, which is generally 250 words.

You should read this

3.1 SaaS-based Models

API pricing mostly depends on:

- The model (the smarter/bigger the model, the higher the cost)

- Input/output tokens (each model has its own input and output token price)

Let’s go with OpenAI pricing (15 May 2025):

| Model | Description | Input (1M tokens) | Cached Input (1M tokens) | Output (1M tokens) |

|---|---|---|---|---|

| GPT-4.1 | Smartest model for complex tasks | $2.00 | $0.50 | $8.00 |

| GPT-4.1 Mini | Affordable model balancing speed & intelligence | $0.40 | $0.10 | $1.60 |

| GPT-4.1 Nano | Fastest, most cost-effective model for low-latency tasks | $0.100 | $0.025 | $0.400 |

Our experiments showed that GPT-4.1 Mini is sufficient for our task, so we go with it.

Scaling is not our concern here, since OpenAI handles it (although we will still face problems).

- A 3-page PDF is roughly 500 * 3 = 1500 words.

- I assume 1 word is approximately 0.75 tokens (source). Note that OpenAI's own rule of thumb is the reverse, 1 token ≈ 0.75 words (about 1.33 tokens per word), so treat the figures below as a lower bound; real token counts will be roughly 1.8x higher.

- Input: 1500 words * 0.75 tokens/word = 1125 input tokens per request.

- Output: 250 words * 0.75 tokens/word = 187.5 output tokens per request.

- Input cost: 1125 tokens * 100,000 requests * $0.40 / 1 million tokens = $45

- Output cost: 187.5 tokens * 100,000 requests * $1.60 / 1 million tokens = $30

$45 (input) + $30 (output) = $75 per month
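The arithmetic above can be wrapped in a small helper so you can swap in other models or traffic assumptions. A minimal sketch; the function name is mine, and the prices are the GPT-4.1 Mini figures from the table above.

```python
from typing import Tuple


def monthly_api_cost(
    requests: int,
    input_tokens: float,
    output_tokens: float,
    input_price_per_million: float,
    output_price_per_million: float,
) -> Tuple[float, float, float]:
    """Return (input cost, output cost, total cost) in dollars per month."""
    input_cost = requests * input_tokens * input_price_per_million / 1_000_000
    output_cost = requests * output_tokens * output_price_per_million / 1_000_000
    return input_cost, output_cost, input_cost + output_cost


# 100,000 requests, 1125 input and 187.5 output tokens, GPT-4.1 Mini pricing.
inp, out, total = monthly_api_cost(100_000, 1125, 187.5, 0.40, 1.60)
print(f"input=${inp:.2f} output=${out:.2f} total=${total:.2f}")
# input=$45.00 output=$30.00 total=$75.00
```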

However, OpenAI and any other API provider can have downtime, and they all have rate limits, so you should consider adding another API provider as a backup in case a request fails.

For example, our apps have frequently hit the 60 requests per minute limit (x-ratelimit-limit-requests). To solve that:

- Use batch processing, or delay requests in the codebase if response time is not critical.

- Use another LLM API as a backup.

| FIELD | SAMPLE VALUE | DESCRIPTION |
|---|---|---|
| x-ratelimit-limit-requests | 60 | Maximum number of requests allowed before exhausting the rate limit. |
| x-ratelimit-limit-tokens | 150000 | Maximum number of tokens allowed before exhausting the rate limit. |
| x-ratelimit-remaining-requests | 59 | Remaining number of requests allowed before exhausting the rate limit. |
| x-ratelimit-remaining-tokens | 149984 | Remaining number of tokens allowed before exhausting the rate limit. |
| x-ratelimit-reset-requests | 1s | Time remaining until the request rate limit resets. |
| x-ratelimit-reset-tokens | 6m0s | Time remaining until the token rate limit resets. |
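Both remedies (delaying requests and falling back to another provider) can be combined in one small wrapper. This is a sketch under assumptions: `RateLimitError` and the provider callables are hypothetical stand-ins for whatever your real SDKs raise and expose, not actual library APIs.

```python
import time
from typing import Callable, Optional, Sequence


class RateLimitError(Exception):
    """Hypothetical error type; map your SDK's rate-limit exception to this."""


def call_with_fallback(
    providers: Sequence[Callable[[str], str]],
    prompt: str,
    retries_per_provider: int = 2,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Try each provider in order, backing off exponentially on rate limits."""
    last_error: Optional[Exception] = None
    for provider in providers:
        for attempt in range(retries_per_provider):
            try:
                return provider(prompt)
            except RateLimitError as err:
                last_error = err
                sleep(base_delay * 2 ** attempt)  # waits 1s, 2s, ... per attempt
    raise RuntimeError("all providers are rate limited") from last_error


# Usage with fake providers (no network calls, no real sleeping).
def primary(prompt: str) -> str:
    raise RateLimitError("429 Too Many Requests")


def backup(prompt: str) -> str:
    return "summary of: " + prompt


print(call_with_fallback([primary, backup], "my 3-page pdf", sleep=lambda s: None))
```

Passing `sleep` as a parameter keeps the wrapper easy to test; in production you would leave the default `time.sleep`.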

But it’s obvious that $75 is much cheaper than deploying LLMs yourself.

3.2 Open Source Model Deployment

Things get crazy in here. Let’s discuss why you may need to choose Open Source models:

- 🛡️ In defense sector, companies don’t want to use public API.

- 🧮 You may use LLMs to categorize DB rows consistently/constantly, and you have millions of records.

- 🧠 OpenAI may not fix your problem, you may want to fine-tune a better model.

- 🌐 In remote locations or areas with limited connectivity, open-source models can be deployed locally, reducing reliance on the internet.

- 📍 Data sovereignty: you may need to keep data in specific geographical regions to comply with legal or internal policies.

Let’s assume you’ve found your model and are now looking for a GPU.

Before that, let’s estimate our peak usage:

- 📈 100,000 api request in a month

- 💤 On weekends demand/traffic is not high.

- ☀️ We expect peak requests during afternoon.

So:

- You assume weekdays carry 80% of the traffic (rather than 5 weekdays / 7 days ≈ 0.71).

- You assume 50% of the weekday traffic happens in the 4 afternoon hours, and the remaining 20 hours share the rest evenly.

- With about 22 weekdays in a month: 100,000 * 0.8 * 0.5 / (22 weekdays * 4 hours * 60 mins) ≈ 7.6 requests per minute on average during peak hours. Let’s round that up generously to 16 requests per minute.

- Let’s quadruple that so we can still keep up in the minutes with the highest load.

→ So at peak we plan for 64 requests per minute.
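As a sanity check on the estimate above, here is a small sketch that spreads the peak-hour traffic over the weekdays of a month. The 22 weekdays per month is my assumption, and the function name is hypothetical. With these inputs the average comes out below the 16 requests per minute used above, so 16 (and 64 after the 4x margin) leaves extra headroom.

```python
def peak_requests_per_minute(
    monthly_requests: int,
    weekday_share: float,
    peak_share: float,
    peak_hours_per_day: float,
    weekdays_per_month: int = 22,  # assumption: roughly 22 weekdays in a month
) -> float:
    """Average requests per minute during weekday peak hours."""
    peak_requests = monthly_requests * weekday_share * peak_share
    peak_minutes = weekdays_per_month * peak_hours_per_day * 60
    return peak_requests / peak_minutes


average = peak_requests_per_minute(100_000, 0.8, 0.5, 4)
print(f"{average:.1f} requests/minute on average in peak hours")
```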

You fine-tuned DeepSeek R1:8B and are looking for a GPU that can run inference.

- 🧪 You use DeepSeek R1:8B

- 🔢 Q8 quantization is OK for you.

- 📦 You want to run inference on one request at a time (batch size of 1).

I found an amazing website that does realistic calculations of VRAM/GPU needs per model. You can experiment here:

👉 Can You Run This LLM? VRAM Calculator

Let’s say you choose a single RTX 4060 Ti (16 GB); the plain RTX 4060 ships with 8 GB.

- 🔄 On peak times, you have 64 requests.

- 🧾 Max tokens per input: the sequence length is 1125 tokens. Input size affects time-to-first-token.

- ⚙️ Set number of GPUs to 1, we can increase if calculations show it’s not enough.

- 👥 Set concurrent users to 1, we will play with it to see if we can process peak demand.

The website (kudos to them, the numbers look realistic compared with my experiments) says:

“Generation Speed: ~51 tok/sec per single user.”

However, we know that:

- ⏱️ At peak, we expect 64 requests per minute.

- If requests are handled one at a time, each call must finish within 60 / 64 ≈ 0.94 seconds.

- 🧠 We generate 187.5 output tokens per request, which takes 187.5 / 51 ≈ 3.68 seconds for a single request.

- 📉 Also, as the number of concurrent users grows, tokens per second per user drops even further!

So the GPU is not enough! What to do now?
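The same comparison as a quick calculation. It is a rough sketch: it ignores prompt processing time and assumes every parallel stream keeps the full ~51 tok/sec, which (as noted above) is optimistic. The function names are mine.

```python
import math


def seconds_per_request(output_tokens: float, tokens_per_second: float) -> float:
    """Generation time for one request, ignoring time-to-first-token."""
    return output_tokens / tokens_per_second


def required_parallel_streams(requests_per_minute: float, request_seconds: float) -> int:
    """Concurrent generations needed to keep up (arrival rate x service time)."""
    return math.ceil(requests_per_minute * request_seconds / 60)


per_request = seconds_per_request(187.5, 51)
print(f"{per_request:.2f} s per request")             # 3.68 s per request
print(f"{60 / 64:.2f} s budget per request at peak")  # 0.94 s budget per request at peak
print(required_parallel_streams(64, per_request), "parallel streams needed")
```

If one GPU cannot sustain that many streams at the quoted speed, you are in the "GPU is not enough" case.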

- 🪶 Try Q4 quantization or smaller if model quality doesn’t drop!

- 🖥️ Rent a bigger GPU, it seems 2x RTX 6000 gives ~188 tok/sec.

- 🧩 Go with multi-GPU solution, but it’ll have an engineering cost! I have never done that honestly :)

- ⚗️ Fine-tune a smaller model, fine-tuning is not that hard to experiment!

- 🤹 Go with batching on peak times, batch process 2 users, but it’ll have an engineering cost! Again I have never done that honestly :)

- 🌥️ If you can still use a public API, use it at peak times! A suitable GPU already handles most of the demand, so instead of implementing multi-GPU, route overflow traffic to the public API. You need to write the backend for that.

Also beware:

- 💸 Deploying/training LLMs eats up your team's time, and salaries cost money too!

#### 3.3 Why output tokens are more expensive than input tokens?

This question has great answers here and here.

In short: when a model processes the input tokens (prefill), it handles all of them at once in parallel, which is efficient. But when generating (decoding), it predicts one token at a time, in order. After each token, it must run the model again to predict the next one. This step-by-step process makes decoding slower and more expensive than processing the input.

| Aspect | Input Tokens (Encoding) | Output Tokens (Decoding) |

|---|---|---|

| Compute (FLOPs) | All input tokens are processed together in one forward pass | Each output token requires its own forward pass |

| Scaling Behavior | Without KV cache, compute grows roughly quadratically with input length | With KV cache, compute grows linearly as output tokens increase |

| KV Cache Role | Built during encoding | Accessed and extended at every output generation step |

| Memory Bandwidth | Relatively low: weights are read once for the whole prompt | High: weights and KV cache are re-read at every autoregressive step |

| Latency Sensitivity | Tokens processed in parallel batches, so latency is low | Tokens generated one by one, so latency is critical |

| Number of Transformer Runs | One parallel run over the whole input | One run per output token |

| Cost per Million Tokens | About $5 (e.g., GPT-4o) | About $15 (3× more expensive) |

| Main Cost Drivers | Mostly compute power (FLOPs) | Memory bandwidth, cache maintenance, and latency overhead |

- Compute cost per token is similar, thanks to KV cache (avoids recomputing keys/values).

- Output token generation is sequential, each step adds to memory and latency cost.

- KV cache grows with sequence length, increasing memory bandwidth needs.

- Memory bandwidth (not just memory size) is a bottleneck—especially at inference scale.

- Pricing reflects practical engineering constraints (not just FLOPs), hence higher price for outputs.

- “With KV caching, it costs almost the same FLOPs to take 100 input tokens and generate 900 output tokens, or vice versa.” – leogao

- “The model has to run once per output token, with gradually growing context.” – mishka

- “Memory bandwidth becomes the bottleneck, especially for output token generation.” – Peter Chng
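To get a feel for how the KV cache grows with sequence length, here is a back-of-the-envelope sketch. The model dimensions (32 layers, 8 KV heads, head size 128, 2 bytes per value) are assumptions for illustration only and are not taken from the sources quoted above; plug in the numbers from your own model's config.

```python
def kv_cache_bytes(
    seq_len: int,
    n_layers: int = 32,        # assumption: illustrative model depth
    n_kv_heads: int = 8,       # assumption: grouped-query attention with 8 KV heads
    head_dim: int = 128,       # assumption
    bytes_per_value: int = 2,  # fp16/bf16
) -> int:
    """Bytes needed to cache keys and values for one sequence."""
    # Factor 2 = one key vector plus one value vector per layer and KV head.
    return 2 * n_layers * n_kv_heads * head_dim * bytes_per_value * seq_len


per_token = kv_cache_bytes(1)
print(per_token // 1024, "KiB per token")

# Our example request: 1125 input tokens plus about 188 output tokens.
total = kv_cache_bytes(1125 + 188)
print(f"{total / 2**20:.1f} MiB for one request")
```

Every decoding step has to read this whole cache again, which is why memory bandwidth, not just FLOPs, drives the price of output tokens.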

4. Explain the Temperature parameter and how to set it.

We have discussed that LLMs are next-word prediction machines. But LLMs don't magically come up with the next word. They produce a set of candidates for it. There are usually several ways to complete a sentence while staying in the same context.

“My cat is ….” beautiful? gorgeous? cute? evil? All of these and more are possible. But “gpu” can’t be there, right?

| Word | Probability |

|---|---|

| beautiful | 0.35 |

| gorgeous | 0.25 |

| cute | 0.20 |

| evil | 0.15 |

| friendly | 0.04 |

| gpu | 0.01 |

LLMs assign a probability to each possible next word. Temperature rescales those probabilities before one word is sampled.

- If we set temperature to 0, the most probable word is always selected, and the sentence continues the way that word shapes the meaning.

- If we set temperature to 0.8, the second or third most probable word can also be randomly selected, and the sentence continues the way that word shapes the meaning.

Creativity comes from choosing random words. Compared with temperature 0, setting temperature to 0.8 makes it possible to pick evil, and the sentence becomes: “My cat is evil, I hate her”

Determinism comes from always taking the top word. Setting temperature to 0 always picks beautiful, and the sentence becomes: “My cat is beautiful, I love her”
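Here is a minimal sketch of what temperature does to the probabilities in the table above. Real models apply temperature to logits before the softmax; dividing log-probabilities by the temperature and renormalizing, as done here, is mathematically equivalent. The function names are mine.

```python
import math
import random
from typing import Dict

NEXT_WORD = {
    "beautiful": 0.35, "gorgeous": 0.25, "cute": 0.20,
    "evil": 0.15, "friendly": 0.04, "gpu": 0.01,
}


def apply_temperature(probs: Dict[str, float], temperature: float) -> Dict[str, float]:
    """Rescale a probability distribution; temperature 0 means greedy (argmax)."""
    if temperature <= 0:
        best = max(probs, key=probs.get)
        return {word: (1.0 if word == best else 0.0) for word in probs}
    scaled = {word: math.exp(math.log(p) / temperature) for word, p in probs.items()}
    total = sum(scaled.values())
    return {word: value / total for word, value in scaled.items()}


def sample_word(probs: Dict[str, float], temperature: float, rng: random.Random) -> str:
    """Draw one next word after temperature scaling."""
    adjusted = apply_temperature(probs, temperature)
    words = list(adjusted)
    return rng.choices(words, weights=[adjusted[w] for w in words], k=1)[0]


rng = random.Random(0)
print(sample_word(NEXT_WORD, 0, rng))  # beautiful (always, at temperature 0)
for t in (0.5, 1.0, 2.0):
    adjusted = apply_temperature(NEXT_WORD, t)
    print(t, {w: round(p, 3) for w, p in adjusted.items()})
```

Lower temperatures sharpen the distribution toward "beautiful"; higher ones flatten it, so unlikely words such as "gpu" get picked more often.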

| Beautiful Illustration by Mastering LLM Blog |

But in practice:

- Choose low temperature when:

  - You need consistent, reliable, and precise outputs

  - The task requires factual accuracy or authoritative answers

  - You want to build user trust by avoiding unpredictable results

  - The application demands low cognitive load, so users get clear recommendations without confusion

  - Minimizing user decision fatigue is important

  - The domain is sensitive or high-stakes, where errors are costly (e.g., medical, legal)

- Choose high temperature when:

  - Creativity, novelty, or variety is desired (e.g., brainstorming, storytelling)

  - You want to keep users engaged and curious with unpredictable responses

  - Encouraging users to explore different ideas or prompt refinements is beneficial

- Trade-offs to consider:

  - Low temperature can make the model seem more confident but may cause users to over-trust incorrect answers

  - High temperature increases creativity but can lead to incoherent or irrelevant responses

  - High temperature may cause decision fatigue if users are overwhelmed by too many options

  - Low temperature might make the experience feel stale or repetitive over time

- Summary:

  - Use low temperature for reliability and clarity

  - Use high temperature for creativity and engagement

  - Always consider user expectations, context, and product goals when deciding

5. Explain the basic structure of prompt engineering

Note : I may need to add more content to this section.

Read everything here to master

Before diving in, I should say the structure below has been very beneficial for my 5k MAU app and 2 POCs. You may have seen the illustration before and thought “another AI influencer’s content”, but think twice.

| learnprompting.org |

The structure above, which we’ll discuss now, is mostly used for apps where the user expects a single, non-conversational answer:

- Generate interview questions in some rule. -> Question is given to you and that’s it.

- Summarize the content with the neutral tone. -> Content is summarised and is given to you.

However, if you are developing a conversational app, you should consider:

- Rules tend to be forgotten as the number of messages increases.

- Users can try to jailbreak or hijack the prompt.

- If the conversation gets long (this generally happens when you paste a text/document into the conversation), you exceed the context length of the model, and your “command prompts” at the beginning are the first to be dropped when the history is trimmed on a FIFO (First In, First Out) basis.
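One common way around the last problem is to trim the history yourself and always keep the system prompt. A minimal sketch, assuming chat messages are dicts with `role` and `content`; the word-count tokenizer is a crude stand-in (an assumption), so swap in your model's real tokenizer.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


def trim_history(
    messages: List[Message],
    max_tokens: int,
    count_tokens: Callable[[str], int] = lambda text: len(text.split()),
) -> List[Message]:
    """Keep every system message, then as many of the newest messages as fit."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    budget = max_tokens - sum(count_tokens(m["content"]) for m in system)

    kept: List[Message] = []
    for message in reversed(rest):  # walk from newest to oldest
        cost = count_tokens(message["content"])
        if cost > budget:
            break  # everything older than this is dropped
        kept.append(message)
        budget -= cost
    return system + list(reversed(kept))


history = [
    {"role": "system", "content": "You are a neutral summarizer"},
    {"role": "user", "content": "first message with five words"},
    {"role": "assistant", "content": "second reply here"},
    {"role": "user", "content": "latest question"},
]
for message in trim_history(history, max_tokens=10):
    print(message["role"], "->", message["content"])
```

The oldest user message is dropped, but the rules in the system prompt survive.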

Let’s go for the cases mentioned, illustration is very helpful.

5.1 Role Prompting

The role defines what the AI should consider itself to be while responding to you. It can be a historian, a harsh interviewer who asks hard questions, or a kind call-center agent.

! Never forget, it’s the first thing your AI reads! Although in practice you may see that giving commands in random places still works, research shows that where you place important context matters.

Lost in the Middle: How Language Models Use Long Contexts and a good summary of paper

LLMs Get Lost in Multi-turn Conversation and a good summary of paper

Defining the role/mood of your AI prepares it for the style to use and for how to think about the subject.

Let’s see this on qwen:1-7B

USER: you are expert interviewer. what would you ask to product manager in interview?

ASSISTANT:

Okay, I need to figure out what questions an expert interviewer would ask a product manager during an interview. Let me start by recalling what I know about product management interviews. Product managers are responsible for defining product goals, managing the product lifecycle, and ensuring alignment ....so many things... First, I should consider the different aspects of a product manager's role....* I remember that interviews often start with a greeting and an introduction. * ...

ASSISTANT: Hi, welcome to the interview…..

In the middle of its thinking, the AI realised that expert interviewers greet people.

If you do not assign the role by saying * you are expert interviewer *, the AI jumps directly to the question:

ASSISTANT:

... For example, instead of "What do you think about product management?" they should ask "How do you approach defining the product strategy for a new feature?" ...

So, in the first paragraph, you should define enough of the following:

- Role

- Mindset

- Tone

- Style

- Behavior

- Goal

- Audience

- Constraints

5.2 Instruction Prompt

Once you’ve assigned the role to the AI, you should constrain it to focus on a specific task within its responsibility.

In this section, you should be specific and tell the AI what to do, starting directly with a verb: Specify, Summarize, Translate, Do, Classify, Divide, Distinguish.

Then, you should continue with the action point in general.

USER: Translate the given text in Turkish, considering the localisation of the synonyms in the Turkish language, as if the direct translation of synonyms are meaningful for the target language.

You should also consider using markdown headings while defining the rules; it has been observed to work:

## General Rules

- Try to match with the same number of words as the translation shouldn’t increase the number of pages.

## Avoid

- Never translate technical words in article, duckDB is not ordekDB in Turkish!
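Putting 5.1 and 5.2 together, the prompt can be assembled from its parts in code, which keeps the role first and the rules under markdown headings. A minimal sketch; the function name, the `## Text` section, and the sample strings are my own illustration.

```python
from typing import List


def build_prompt(role: str, instruction: str, rules: List[str], avoid: List[str], text: str) -> str:
    """Assemble role, instruction, and markdown-tagged rules into one prompt."""
    parts = [role, "", instruction, "", "## General Rules"]
    parts += [f"- {rule}" for rule in rules]
    parts += ["", "## Avoid"]
    parts += [f"- {item}" for item in avoid]
    parts += ["", "## Text", text]
    return "\n".join(parts)


prompt = build_prompt(
    role="You are an expert English-to-Turkish translator.",
    instruction="Translate the given text into Turkish.",
    rules=["Keep roughly the same number of words as the source."],
    avoid=["Never translate technical words such as duckDB."],
    text="duckDB is an in-process analytical database.",
)
print(prompt)
```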

5.3 Defining Examples

I’ve built 3 big apps with LLMs and RAG. I can assure you that good examples are 10 times better than long instruction prompts. Whatever you define in the instructions, after some context the AI either won’t follow it or gets bogged down in details. Good examples work even if you don’t have any instruction prompt.

You need to define good examples on:

- What to do (that’s for sure)

- What NOT to do

Like

### ✅ Good Example:

Q: Can you explain self-attention in Transformers?

A: Sure! Think of each word looking at every other word and asking: “Should I care about you?”...

### ❌ Bad Example:

A: Self-attention is a mechanism that allows each token to... [copy-pasted Wikipedia-level dump]...

If you would like to get JSON output by using ==JSON Mode== or ==Structured Outputs==, you should define the response format in the prompt:

{

"title": "The Rise of Transformers in NLP",

"author": "Jane Doe",

"main_points": [

"Transformers revolutionized NLP by using self-attention.",

"They enable parallel processing of tokens.",

"They have become the backbone of modern language models."

]

}

or

{

"title": str title of movie,

"author": str author,

"main_points": List of str of 3-4 words explanation of main points

}

or

with the OpenAI Python SDK, as a pydantic model:

```python
from typing import List

from pydantic import BaseModel, Field


class MovieSummary(BaseModel):
    """Response schema; the field descriptions guide the model as well."""

    title: str = Field(..., description="Title of the movie")
    author: str = Field(..., description="Author of the movie or article")
    main_points: List[str] = Field(
        ...,
        description="List of main points (each 3-4 words) summarizing the content",
    )
```

OpenAI reads the field descriptions too, which has helped me a lot.
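Whichever format you ask for, it is worth checking what comes back before using it. Below is a minimal, standard-library-only sketch that validates the shape of the JSON from the examples above; `parse_summary` is a hypothetical helper of mine, not part of any SDK.

```python
import json
from typing import Any, Dict


def parse_summary(raw: str) -> Dict[str, Any]:
    """Parse the model's reply and check it has the fields we asked for."""
    data = json.loads(raw)
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for key in ("title", "author"):
        if not isinstance(data.get(key), str):
            raise ValueError(f"'{key}' must be a string")
    points = data.get("main_points")
    if not isinstance(points, list) or not all(isinstance(p, str) for p in points):
        raise ValueError("'main_points' must be a list of strings")
    return data


reply = """
{
  "title": "The Rise of Transformers in NLP",
  "author": "Jane Doe",
  "main_points": ["Transformers revolutionized NLP by using self-attention."]
}
"""
print(parse_summary(reply)["title"])  # The Rise of Transformers in NLP
```

If validation fails, a common pattern is to retry the request or send the error message back to the model.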

6. Explain in-context learning

Language Models are Few-Shot Learners

AI can’t be retrained on fresh data every day; that's simply impractical. But a model can interpret data it is given in the prompt. RAG (retrieval-augmented generation) builds on this principle: a pipeline brings the relevant data into the prompt so that your AI is informed about it.

Continue the following story fragment in the style of Shakespearean English:

Story start: "The sun dipped below the horizon, casting a golden glow on the ancient castle."

Continuation:

Lo, the amber light did kiss yon aged stones,

Where whispered secrets dwell in shadowed tones.

Then the AI will follow the pattern you’ve shown it in the prompt.

! TBH I am ashamed to explain this, nowadays we need to know these gatekeeping names unfortunately :/

What distinguishes ICL?

- No Parameter Updates: The model’s weights remain fixed; learning happens through the prompt context alone.

- Transient Knowledge: The knowledge gained is temporary and specific to the current prompt; it is not stored permanently.

- Few-Shot Learning: Often called few-shot learning or few-shot prompting, as the model requires only a handful of examples to generalize to the task.

- Leverages Pretraining: Better model, better ICL.

7. Explain types of prompt engineering

PromptingGuide AI and this Digital Adoption Blog have the best content on the types of prompting. So my notes mostly rely on them.

|

|---|

| Types of Prompts Engineering by Digital Adoption |

7.1 Zero Shot Prompting

You trust the LLM, and ask for your task.

USER : Draft a cold email introducing our new SaaS product to potential clients.

ASSISTANT : Here it is: ….

7.2 Few-Shot Prompting

You also explain what to do with a few examples. I remember the days when professors at Bilkent University, Gokberk Cinbis and one of his colleagues, were studying few-shot learning in 2018-19. Back then I was thinking, “it’s impossible”.

USER : Draft a cold email introducing our new SaaS product to potential clients. It should look like : Subject: Unlock Efficiency and Growth with [Your SaaS Product Name] 🚀 Hi [First Name],….

ASSISTANT : Here it is that looks like the examples: ….

or you can add what to do and not to do:

USER : This is awesome! // negative USER : This is promising! // positive

ASSISTANT : … The attachment looks promising …
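Few-shot prompts are easy to build programmatically from labelled examples, which also makes it simple to swap examples in and out while testing. A minimal sketch using the `text // label` format above; the helper name and the example pairs are my own illustration.

```python
from typing import List, Tuple


def few_shot_prompt(examples: List[Tuple[str, str]], query: str) -> str:
    """Format labelled examples, then leave the label of the query open."""
    lines = [f"{text} // {label}" for text, label in examples]
    lines.append(f"{query} //")  # the model is expected to complete the label
    return "\n".join(lines)


examples = [
    ("This is awful!", "negative"),
    ("This is promising!", "positive"),
]
print(few_shot_prompt(examples, "The attachment looks promising"))
```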

7.3 Prompt Chaining

It’s beneficial when you want the AI to ingest/preprocess a portion of the data first, then apply one or more further steps to get the final result.

It’s mostly used in Document QA or retrieval-based pipelines.

```python
# `get_statements_from_db` and `ai.response` are placeholders for your own
# data layer and LLM client (assumed to return the reply text as a string).
statements = get_statements_from_db(id=[123, 32, 124, 523])

# Step 1: ask the model to pick and rank the relevant statements.
initial_prompt = [
    {
        "role": "user",
        "content": (
            "Decide which statements are related to the company's financials "
            f"and rank them.\n\n{statements}"
        ),
    }
]
ai_response = ai.response(initial_prompt)

# Step 2: feed the first answer back and ask for the focused follow-up.
# Put your detailed instructions here: the model focuses better on this
# smaller step than if everything were dumped into a single long prompt.
chain = [
    *initial_prompt,
    {"role": "assistant", "content": ai_response},
    {"role": "user", "content": "Write a summary of the first two documents. ..."},
]
final_response = ai.response(chain)
```

7.4 Chain of Thought (COT) Prompting

This simple prompting idea gives a huge performance boost for most LLMs, especially DeepSeek.

What we try to achieve here is to let the AI think, define steps, and then try to solve the problem, rather than having it solve the problem in a single attempt (single prompt).

SYSTEM: You are a helpful assistant that solves problems by explaining each step before giving the final answer.

USER: If you have 4 pens and buy 3 more, how many pens do you have in total?

ASSISTANT: Let’s think step-by-step:

- You start with 4 pens.

- You buy 3 more pens.

- Adding them together: 4 + 3 = 7.

Answer: 7
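In an app you usually want only the final answer, not the reasoning. If the prompt asks for a fixed marker such as `Answer:`, a small parser can pull it out. A minimal sketch; the marker and the helper name are assumptions of mine, so match them to whatever your prompt requests.

```python
import re
from typing import Optional


def extract_final_answer(response: str) -> Optional[str]:
    """Return the text after the last 'Answer:' line, or None if there is none."""
    matches = re.findall(r"^Answer:\s*(.+)$", response, flags=re.MULTILINE)
    return matches[-1].strip() if matches else None


response = """Let's think step-by-step:
- You start with 4 pens.
- You buy 3 more pens.
- Adding them together: 4 + 3 = 7.
Answer: 7"""
print(extract_final_answer(response))  # 7
```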

8. What is hallucination, and how can it be controlled using prompt engineering?

Hallucinations are false, fabricated, or inconsistent outputs generated by LLMs that do not align with the input or real-world facts here, here, here, here.

Reasons:

- Training data biases and gaps

- Limited contextual understanding of models

- Incomplete or misleading prompts

- Model architecture and stochastic decoding flaws here, here, here.

Examples:

- Factual inaccuracies: The model might say something like “The Eiffel Tower is in Berlin” or “Shakespeare was born in 1609”, which are simply wrong facts.

- Nonsensical statements: It might generate sentences like “I love winter, but I hate cold weather” or “The cat barked loudly at the mailman,” which don’t make sense logically or factually.

- Context or instruction mismatches: For example, if you ask for a summary of a book, it might start talking about the author’s biography instead of the plot. Or it may ignore a request to write in a formal tone and respond casually.

- Logical inconsistencies: The model might say “If all dogs are animals and some animals are cats, then some dogs are cats,” which is a flawed conclusion based on faulty logic.

here

How to detect:

- Analyzing prompts for ambiguity

- Consistency and cross-checks within generated content

- External fact verification using knowledge bases or APIs here
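The consistency check can be automated in a simple way: ask the same question several times and measure how often the answers agree. Low agreement is a hint (not proof) that the model is guessing. A minimal sketch; the sampled answers are hard-coded here, and in practice they would come from repeated calls to your LLM client.

```python
from collections import Counter
from typing import List, Tuple


def agreement(answers: List[str]) -> Tuple[str, float]:
    """Return the most common answer and the share of samples that gave it."""
    if not answers:
        raise ValueError("need at least one answer")
    normalized = [a.strip().lower() for a in answers]
    answer, count = Counter(normalized).most_common(1)[0]
    return answer, count / len(normalized)


# Hypothetical samples for "Where is the Eiffel Tower?"
samples = ["Paris", "paris ", "Berlin", "Paris"]
print(agreement(samples))  # ('paris', 0.75)
```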

How to reduce or eliminate hallucinations:

- Curate and improve training data quality

- Fine-tune models with domain-specific data

- Use prompt engineering

- Use low temperature to control chaos

- Combine with retrieval-augmented generation (RAG) to ground outputs in verified external knowledge here

Role of Retrieval-Augmented Generation (RAG):

- Uses in-context learning, so the response is based on the most recent information provided in the prompt

- Integrates external factual databases during generation

- Grounds outputs in real data, significantly reducing hallucinations here

Fully eliminating hallucinations is not currently possible due to inherent model limitations and learning constraints here

9. What are some aspects to keep in mind while using few-shot prompting?

TBC.

10. What are certain strategies to write good prompts?

TBC.

11. How to improve the reasoning ability of LLM through prompt engineering?

TBC.

12. How to improve LLM reasoning if your Chain-of-Thought (COT) prompt fails?

TBC.

Side Notes:

- 90% of the blog is/will be written by me.

- I may use ChatGPT to create 10% of the blog, just to make content easier to read or to format the algorithms in markdown. But nothing more than this. Just for help.

- This doesn’t mean I create posts with ChatGPT and then proofread and add to them. It means I write the posts myself; along the way I found that ChatGPT can graph/format algorithms better than I can, so I prompt it with what I want to explain. Then it gives a better-formatted, more fun-to-read version of just some parts of the blog.

- The reason is that I already spend time creating content, and I learn while writing, but I understand the content before writing, so I want to minimize writing time.